Infrared (IR) Diodes are very popular now for illuminating the eye for eye-tracking purposes. Of course, projecting stuff into your eye comes with hazards, and we want to make sure these things are safe. I've been working on an IR blink sensor, and while many papers in academia seem to assume safety with a low power IR diode, I wanted to be a little more rigorous– especially since we're attempting to have them worn all day, every day by people.

The laissez-faire attitude around Near IR is not totally unwarranted– IR diodes do at least partially deserve their innocuous reputation. IR is what we think of as heat, and we get a lot of it from the sun (the majority of the energy from the sun at the earth's surface is IR). Of what remains, ~40% of the sun's energy is visible light, and only a couple percent is UV (the actually dangerous stuff that gives you cancer and contributes to macular degeneration). UV is typically the one we really need to worry about.

While we get quite a bit of IR radiation in the ambient world, we do need to worry about how it interacts with our eyes. Our eyes have a few parts: the outer part is the cornea, which covers the front of the eye; under the cornea, there is a little pocket of fluid called the aqueous humor which covers the pupil and the pigmented iris that surrounds it. Behind the pupil is a lens that focuses light onto the retina. Each of these parts can be damaged, and each has a different mode of damage.

Causes of Concern

Increased IR exposure does put you at increased risk of cataracts. Cataracts are pretty common (68% of people over 80 have them in the US), and they occur when the lens of the eye gets cloudy. IR is believed to contribute to their formation if the lens of the eye absorbs the IR light and heats up. In 'Infrared radiation and cataract II. Epidemiologic investigation of glass workers', we find that folks working in the glass industry for 20 years have >10 times the risk of cataracts. Similar studies have been done on steel and iron workers.

It is also possible to damage the retina of the eye with IR radiation, though the data here seems less clear. Other wavelengths are more tightly coupled to age-related macular degeneration; near IR exposure may actually improve retinal function as you age. Based on a recent nature paper entitled 'Does infrared or ultraviolet light damage the lens?', it seems that photochemical damage is unlikely, and we really only need to worry about thermal effects that would lead to retinal damage. This means the typical relationship for photochemical injury, where damage is integrated over intensity/duration, doesn't apply. Instead, thermal damage only happens when tissue heats beyond a certain point.

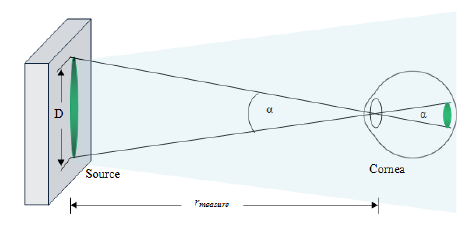

This can happen with a large burst of coherent light (much like a visible laser). This is really only a concern for the nearest of the near IR, because these wavelengths are close enough to visible wavelengths to actually make it through the lens of the eye to the retina (longer wavelengths simply get absorbed by the cornea and lens and don't pass through). Of course, the lens of the eye focuses light on the retina, and this can have a hugely multiplicative effect on concentrated hot spots (focusing the power up to 100,000 times more than what would be seen at the cornea). This focusing works exactly like visible light, and the distance of the source is important to how focused it will be. From 'Class I infrared eye blinking detector' we find the following:

In case of a point-type and diverging beam source, as a LED, the hazard increases with decreasing distance between the beam source and the eye. This is true until distance is greater than the shortest focal length. Thus for distance less than the shortest focal length there is a rapid growth of the retinal image and a corresponding reduction of the irradiance, even though more power may be collected.

So it turns out there is actually a complicated relationship beyond just distance to irradiance at the retina, which has to do with the focal effects and optics of the lens.

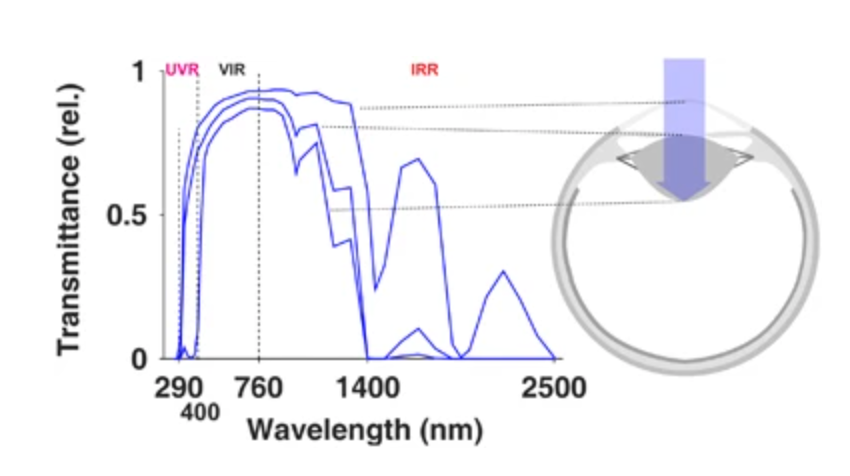

The paper 'Eye safety related to near infrared radiation exposure to biometric devices' suggests that IR-induced warmth on the outer eye will cause pain and blinks to conduct heat away, and that the main thing we should worry about for near IR is retinal exposure because it is conducted like visible light to the retina, but doesn't cause a blink reflex like bright visible light would. This is frequency dependent, as we can see from the following graph:

The region we care about actually has quite varied behavior. On the low side (760nm) around 80% of the power makes it to the retina, and almost none is absorbed by the cornea or lens; as we move to longer wavelengths, less than 50% makes it to the retina, with almost 50% split between the cornea and the lens. As we'll see when we calculate 'retinal hazard', we weight the energy by a value that captures this transmittance, so the longer the wavelength, the less we have to worry about the retina and the more we have to worry about the cornea and lens. Where we should focus our efforts depends on the wavelength we choose.

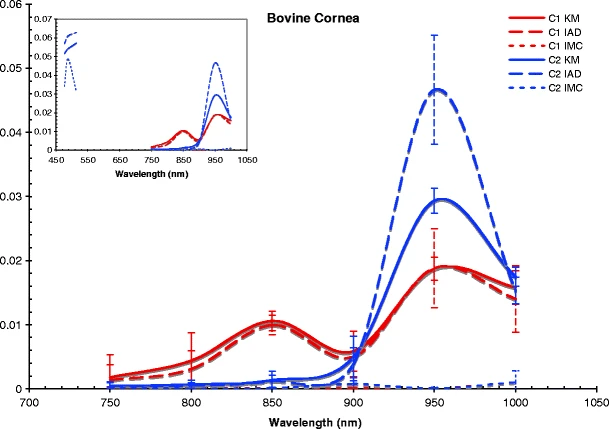

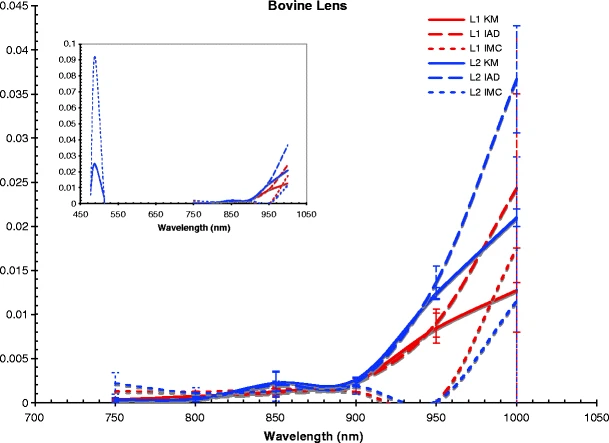

Another analysis of cow eye parts shows how they absorb over wavelengths (cows have pretty similar eyes to us):

For the cornea, we care about temperature equilibrium under continuous exposure. It turns out this is a complicated thing to understand and contextual, but we have a few interesting tidbits– the iris is very absorptive, the pupil is very dynamic (contracting from ~7mm in dark to ~1.6 mm in light), and blinks happen once every ~3 seconds for ~0.3 seconds (10% of the time our eye is closed!). That's not factoring in the other main mechanisms governing temperature– blood flow in the eye, conduction through tissue, body temperature, and ambient temperature. Ocular surface temperature can vary quite a bit depending on the weather!

Measurements

We care about power [W], which gets the name 'Radiant Flux' when we look at how much is delivered through a specific area, and 'Irradiance' [mW/cm2] when we normalize by the area. Here, when we talk about the irradiance (power/area at the eye), we've accounted for the distance between the source and the eye already; the power per area obviously drops as we move further away. If we'd rather measure power through an area in a way that is distance-agnostic, we can also talk about the 'Radiance' [mW/cm2/sr], where a steradian [sr] is a 3D radian which looks like a spotlight cone. This tells us how much power is emitted per unit of source area into one small angular cone. The easiest way to think of this is that the area (mm2) covered by a given cone (sr) grows as we move away from the source, so quoting power per cone rather than per area at the eye removes the distance dependence. Of course, we typically are interested in a wavelength-specific measurement for all of these quantities, so frequently they will be quoted normalized over a 1 nm band of wavelength (i.e. 'irradiance' per nm, which is still called 'irradiance').

IR-radiation is anything above visible light in wavelength on the spectrum between 760 nm (or 0.76 um) and 1 mm (or 1000 um) (remember, a higher/longer wavelength, in nm, means a lower frequency in Hz). It's typically divided into IR-A, -B, and -C or Near, Mid, and Far, but be careful because these distinctions don't map perfectly to each other. For the types of diodes and applications we care about, we'll be strictly in the shortest wavelength Near-IR/A range (760 nm-1400 nm).

In this range, the sun's irradiance at the earth's surface falls linearly from about 0.1 to 0.05 mW/cm2/nm, so we find ourselves in about 32 mW/cm2 over that entire 760-1400 nm bandwidth; however estimates of IR background exposure outside (when you're not looking directly at the sun) are roughly 1 mW/cm2.
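As a rough cross-check of that band total, here is a minimal sketch that integrates a straight line between the two quoted endpoint values. The function name is made up for illustration, and the straight-line shape is only an approximation: the real solar spectrum has atmospheric absorption notches in this range, so the result should be read as an order-of-magnitude figure that lands in the same tens-of-mW/cm2 range as the number above.

```python
def band_irradiance_linear(e_start, e_end, wl_start_nm, wl_end_nm):
    """Integrate a spectral irradiance that falls linearly across a band.

    e_start, e_end: spectral irradiance at the band edges [mW/cm^2/nm]
    wl_start_nm, wl_end_nm: band edges [nm]
    Returns the total band irradiance [mW/cm^2] (area of a trapezoid).
    """
    return 0.5 * (e_start + e_end) * (wl_end_nm - wl_start_nm)


# Endpoint values quoted in the text; the linear shape is an assumption.
solar_ir_a = band_irradiance_linear(0.1, 0.05, 760, 1400)
print(f"Near-IR band irradiance (linear approximation): {solar_ir_a:.0f} mW/cm^2")
```

Either way, direct sunlight in this band is tens of mW/cm2, well above the ~1 mW/cm2 background estimate for when you're not looking at the sun.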

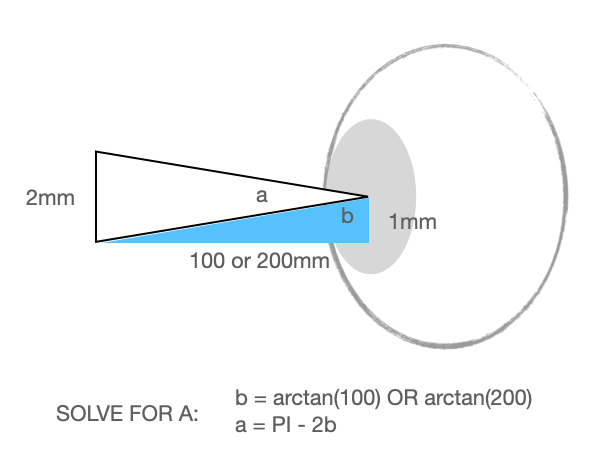

The final thing we need to understand before we move forward is 'angular subtense' (alpha), which is simply a way to describe how large the source appears from the eye (i.e., the angle alpha, in radians, of the cone the source spans as seen from the eye). It describes the minimum image size on the retina that can be produced by focusing on the object; what we care about is how much the eye can help concentrate the power of incoming waves on a small area.

See 'Location and size of the apparent source for laser and optical radiation ocular hazard evaluation' for a good review. It's worth noting that the lenses in our eyes change shape to focus at different distances (accommodation), in addition to the changes in angle between our eyes when we gaze at something close vs. far away (vergence), and the rules are different for collimated or coherent light.

Rules of Thumb for the Retina

In 'Eye Tracking Headset Using Infrared Emitters and Detectors' they calculated a retinal hazard threshold of 92 W/cm2/sr for 10 minute exposures.

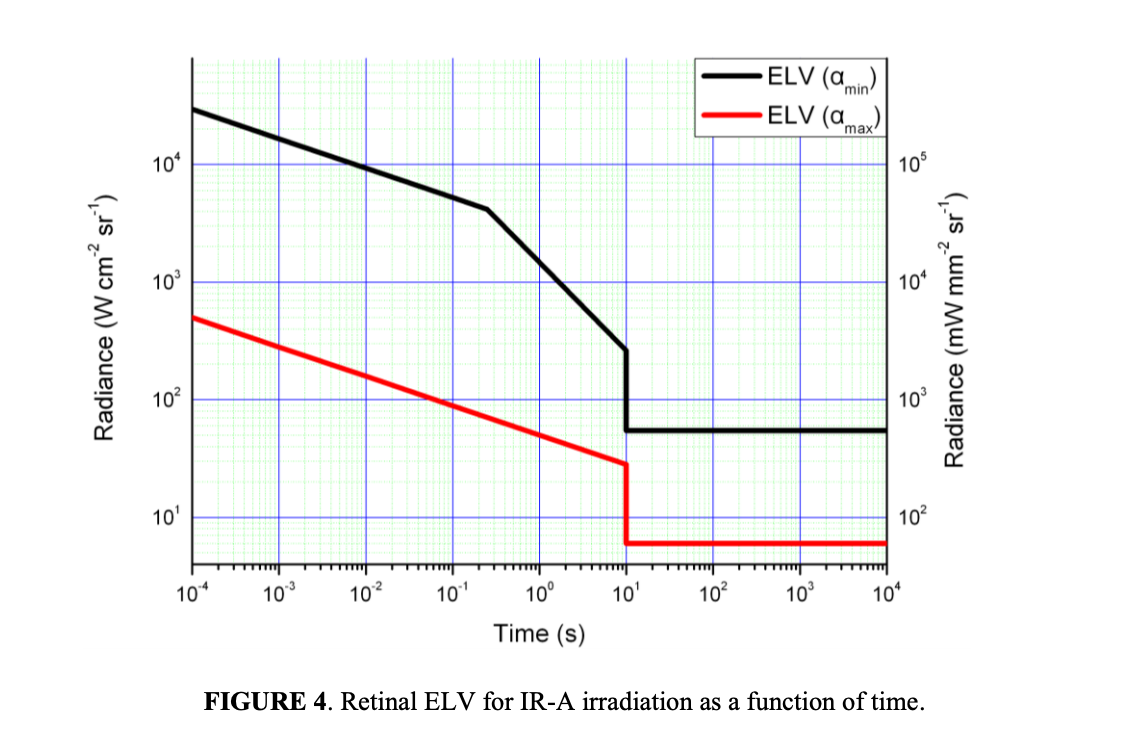

The ICNIRP guidelines 'On Limits of Exposure to Incoherent Visible and Infrared Radiation' suggest that for long duration exposure we should stay below 1900 W/cm2/sr for small sources and below 28 W/cm2/sr for large sources (the image of a small source gets smeared across the retina more easily as the eye moves). The plot below shows limits between 6 and 60 W/cm2/sr depending on apparent size on the retina.

Regulation for the Retina

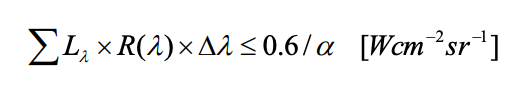

This formula is for retinal damage, based on the 'burn hazard effective radiance' for stimuli that don't come with a visual stimulus that would cause natural avoidance:

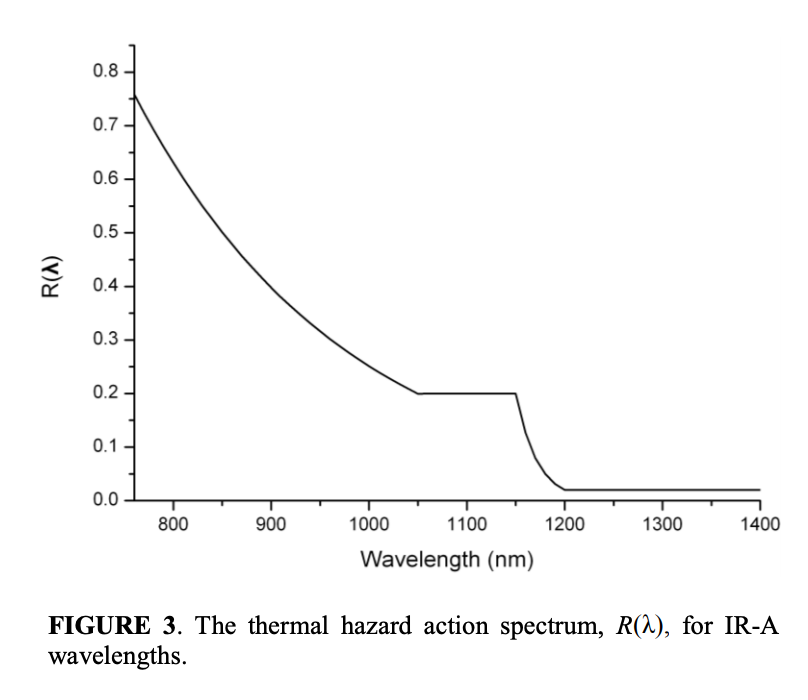

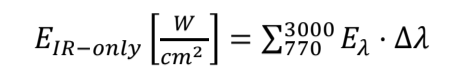

Where L(λ) is the average spectral radiance in a band (W/cm2/sr/nm) times the bandwidth range it's over, weighted by a unitless R function based on how much that frequency is transmitted to the retina. We sum this over all the bands in the range of interest.

There are several worst case assumptions that go with this: a fully dilated pupil (7mm diameter), and irradiance measured at 20cm (ANSI Z136.1) or 10cm (IEC 60825-1) because this is the lower limit of eye accommodation, which means it is the closest you can get to the eye where the eye will still focus the power on the smallest section of the retina (worst case; any closer and the image is blurred). Notice the limit is a 'radiance' limit. That is, it assumes the worst case distance, and is simply the amount of radiated power in a given angular slice from the point source. Alpha is the 'angular subtense' of the source and is taken as 0.011 rad if the apparent source size is smaller than that.

For us, our LED is roughly 1.5x2.5mm, which we'll call 2mm. Let's do our basic math:

It's actually assumed the angular subtense increases over exposure time, as your eye moves and blurs the source around on the retina (which is good if we're trying not to damage it, hence the lower limit). Given the above alpha, the target is 0.6/0.02 = 30 W/cm2/sr.
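The arithmetic behind that 30 W/cm2/sr target can be sketched as follows. This assumes the 2 mm source is viewed from the 10 cm IEC 60825-1 distance mentioned above (which is what gives alpha = 0.02 rad) and that the long-exposure limit has the form 0.6/alpha. The function names are made up for illustration, and the 0.1 rad upper clamp is an assumption chosen to match the 6 W/cm2/sr large-source end of the range quoted earlier.

```python
def angular_subtense_rad(source_size_cm, viewing_distance_cm):
    """Small-angle approximation: angle spanned by the source as seen from the eye."""
    return source_size_cm / viewing_distance_cm


def retinal_radiance_limit(alpha_rad, alpha_min=0.011, alpha_max=0.1):
    """Long-exposure retinal thermal limit in W/cm^2/sr, taken as 0.6 / alpha.

    alpha is clamped to [alpha_min, alpha_max]; alpha_min comes from the text,
    alpha_max is an assumption (it reproduces the 6 W/cm^2/sr large-source value).
    """
    alpha = min(max(alpha_rad, alpha_min), alpha_max)
    return 0.6 / alpha


alpha = angular_subtense_rad(source_size_cm=0.2, viewing_distance_cm=10.0)
limit = retinal_radiance_limit(alpha)
print(f"alpha = {alpha:.3f} rad, limit = {limit:.0f} W/cm^2/sr")
```

A smaller or more distant source pushes alpha toward the 0.011 rad floor, where the limit tops out at roughly 55 W/cm2/sr.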

The R values come from here (note there is an equation in the caption):

As we saw earlier, the amount of energy that gets absorbed by the cornea and the lens at this wavelength is actually quite a lot, so we have an R of ~0.33.
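For reference, an R of ~0.33 is what you get from the ICNIRP retinal thermal weighting for the 700-1050 nm range, R(λ) = 10^((700 − λ)/500), evaluated at 940 nm. The sketch below assumes that form and assumes a 940 nm emitter (the wavelength is not stated in this section, but it is a common choice for these diodes and it reproduces the value above). The function name is made up for illustration, and only the 700-1050 nm branch is implemented.

```python
def retinal_weight(wavelength_nm):
    """Unitless retinal thermal hazard weight R for 700-1050 nm.

    Assumes the form R = 10 ** ((700 - wavelength) / 500); other bands
    use different expressions and are deliberately not covered here.
    """
    if not 700 <= wavelength_nm <= 1050:
        raise ValueError("this sketch only covers 700-1050 nm")
    return 10 ** ((700 - wavelength_nm) / 500)


for wl in (760, 850, 940):
    print(f"R({wl} nm) = {retinal_weight(wl):.2f}")
```

The weight drops steadily with wavelength, which matches the earlier observation that less of the power reaches the retina as we move up from 760 nm.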

Now we need some idea of the Source Radiance over our bandwidth, which means translating the following (from the datasheet) into power:

Unfortunately this is the only piece of information we have, other than that we can set the power through the diode with a current limiting resistor. We've set ours to about 25mW of electrical power through the diode. Current IR LED technology has a peak 'Wall-Plug Efficiency' of 40-50% (electrical to optical power), so we'll assume that our LED is doing really well and has an efficiency of 50%, and thus an optical power of around 12.5mW.

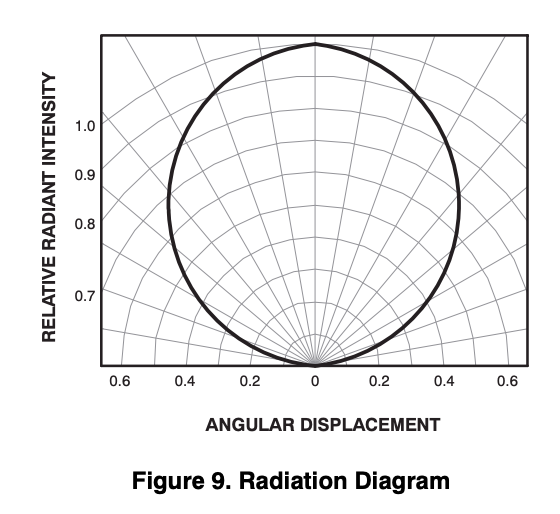

If we take the worst case (most intense) angle above, +-10 degrees has an average intensity of 0.98, and appears to capture about 18.5% of the total radiated power (calculated by looking at average intensity across each 10 degree band). Rounding that up to 20% of our 12.5mW, we'll guess that, right in the middle of the beam, we get around 2.5mW over a 20 degree angle (0.35 radians). This could be calculated more accurately using Lambertian assumptions (LEDs typically have a well defined emission pattern), but this back of the envelope should be fine.

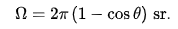

Step 1 is to turn that 2D cross section to a 3D cone steradian– not a trivial task. Stack Overflow and Wikipedia tell us that for angle 2θ (θ=0.175 radians for us):

So we get 0.096 sr. Our 2.5mW thus gives 26.05 mW/sr. We then divide this by the size of our radiating source (4 mm2 = 0.04 cm2) because it's not a perfect point source, so we need to account for its area (if this is confusing, take a look at the light bulb drawing on this website). We get 650 mW/cm2/sr.
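The chain of numbers above can be reproduced with a short sketch. It uses the standard solid angle of a cone with half-angle θ, Ω = 2π(1 − cos θ), and the rounded inputs from the text (θ = 0.175 rad, 2.5 mW in the cone, 0.04 cm2 of emitting area). The function and variable names are made up for illustration.

```python
import math


def cone_solid_angle_sr(half_angle_rad):
    """Solid angle of a circular cone with the given half-angle, in steradians."""
    return 2 * math.pi * (1 - math.cos(half_angle_rad))


theta = 0.175            # half-angle of the 20 degree cone [rad], as in the text
power_in_cone_mw = 2.5   # optical power estimated inside that cone [mW]
source_area_cm2 = 0.04   # 2 mm x 2 mm emitting area [cm^2]

omega = cone_solid_angle_sr(theta)               # [sr]
radiant_intensity = power_in_cone_mw / omega     # [mW/sr]
radiance = radiant_intensity / source_area_cm2   # [mW/cm^2/sr]

print(f"solid angle       = {omega:.3f} sr")
print(f"radiant intensity = {radiant_intensity:.1f} mW/sr")
print(f"radiance          = {radiance:.0f} mW/cm^2/sr")
```

Note that the cone is small enough that the small-angle shortcut Ω ≈ πθ² gives nearly the same answer, so the exact formula matters little here.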

This value is assuming all of the power across all frequencies that the diode is converting to light (its entire bandwidth). If we want to convert this into a bandwidth agnostic number, we have to divide out our expected bandwidth (probably on the order of 20-50nm), but that would be silly since we really just care about total delivered power in the equation above. If we apply our R value, we get a weighted 650*0.33 = 215 mW/cm2/sr over our entire bandwidth.

That 0.215 W/cm2/sr number is quite a ways away from the 30 W/cm2/sr limit we calculated; keep in mind that we also used worst case metrics for this (a fully dilated pupil, from the worst case focal distance, right in the hot spot of the LED). In reality we should expect something smaller; it seems we're good to go for unlimited time as far as the retina is concerned.
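To put a number on "quite a ways away", here is a small sketch of the final comparison using the rounded figures from the text (650 mW/cm2/sr of source radiance, R of 0.33, and a 30 W/cm2/sr limit). The variable names are made up for illustration.

```python
source_radiance_mw = 650.0   # unweighted radiance over the whole band [mW/cm^2/sr]
r_weight = 0.33              # retinal hazard weight R used in the text
limit_w = 30.0               # retinal limit from 0.6 / alpha [W/cm^2/sr]

# Weighted 'burn hazard effective radiance', converted from mW to W.
weighted_radiance_w = source_radiance_mw * r_weight / 1000.0
safety_margin = limit_w / weighted_radiance_w

print(f"weighted radiance = {weighted_radiance_w:.3f} W/cm^2/sr")
print(f"margin to limit   = {safety_margin:.0f}x")
```

So even with the worst case assumptions stacked up, the estimate sits more than two orders of magnitude under the retinal limit.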

Rules of Thumb for the Cornea/Lens

Roughly, background IR from sun exposure at the eye is ~1 mW/cm2, though we apparently get between 20-40 mW/cm2 on our skin.

Occupationally, we see rates of 15-180 mW/cm2 daily for glass blowers at the eye, and 200-600 mW/cm2 for metal workers. Over a decade or more, exposures like these lead to a large increase in cataract risk.

In 'Eye Tracking Headset Using Infrared Emitters and Detectors' they set their safety limit at 14.8 mW/cm2 for 10 minute exposures (they apparently use 5 LEDs, as well as the QRE1113).

Regulations for the Cornea/Lens

The cornea and lens are probably more of a concern: that's where cataracts occur, these structures are moderately absorptive at these wavelengths, and the background exposure from the sun typically doesn't get very high.

The International Commission on Non-Ionizing Radiation Protection (ICNIRP)/IEC-62471 suggests ocular exposure should not exceed 10 mW/cm2 for chronic exposure, though around 100 mW/cm2 is the suggested tolerance for IR lasers; these recommendations are to protect the cornea. The formula is:

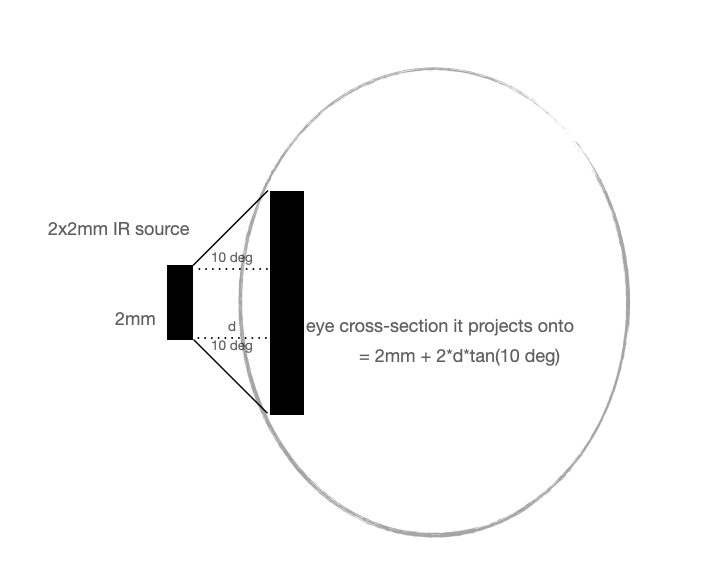

We can get at the basic rule of thumb here– our diode puts out 12.5 mW from a 2x2mm source– that's ~312.5 mW/cm2 directly at the source (if we stuck it right on our eyeball). It's probably ill-advised to do this, even if it would only be heating a very small part of the eye.

For our 2x2mm source, let's go back to our most intense direct emission, which was within the 20 degrees directly in front of the diode. We saw this captures roughly 20% of the radiated power, or 2.5mW.

So we can solve for the minimum safe distance for our example with the most concentrated part of the beam (2.5mW of source power radiating at a 10 degree half-angle from a 2x2mm source) by solving (sqrt(2.5/10)-.2)/(2*tan(10 deg)). Here sqrt(2.5/10) is the side length of the square spot at which the irradiance has dropped to 10 mW/cm2, .2 is the side length of the source, and 2*tan(10 deg) is how quickly the spot widens with distance. Be careful with units; everything should be in cm since our target value uses them.

To hit the 10mW/cm2 safety target, we need to be ~8.5 mm away. Another way to do this calculation is to take the beam divergence that contains 63% of the power, but conservatively assume it contains 95% of the power. That gives a 70 degree beam (35 degree half-angle) at 12.5mW and a safe distance of 6.5 mm.
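Both distances can be reproduced with a small sketch of the same geometry: a square spot that starts at the size of the source and widens linearly with distance, with all of the power spread evenly over it. The function name and the uniform-square-spot model are simplifying assumptions for illustration, not part of any standard.

```python
import math


def min_safe_distance_cm(power_mw, half_angle_deg,
                         source_side_cm=0.2, limit_mw_per_cm2=10.0):
    """Distance at which a uniformly lit square spot drops to the irradiance limit.

    Spot side at distance d: source_side + 2 * d * tan(half_angle).
    Irradiance at distance d: power / side**2. Solve for the limit.
    """
    side_at_limit_cm = math.sqrt(power_mw / limit_mw_per_cm2)
    spread_per_cm = 2 * math.tan(math.radians(half_angle_deg))
    # If the source is already below the limit at contact, the distance is zero.
    return max(0.0, (side_at_limit_cm - source_side_cm) / spread_per_cm)


# Hot spot of the beam: 2.5 mW inside a 10 degree half-angle.
d_hot_spot = min_safe_distance_cm(2.5, 10)
# Whole beam: 12.5 mW inside a 70 degree full angle (35 degree half-angle).
d_full_beam = min_safe_distance_cm(12.5, 35)

print(f"hot spot:  {d_hot_spot * 10:.1f} mm")
print(f"full beam: {d_full_beam * 10:.1f} mm")
```

The two estimates bracket the answer from different directions (a narrow cone with part of the power, a wide cone with all of it), and both land under a centimeter.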

For a sense of scale, your eyelid itself is around 1 mm thick, and eyelashes are longer than 10 mm on average. Based on our measurements of eye distance from the sensor, we should be far enough away not to worry: these calculations really assume the worst case (very close, very direct and concentrated power, an eye that isn't moving around, plus a significant overestimate of concentrated power), and of course the recommendations have margin. Moreover, irradiance falls off roughly with distance squared, so the safety margin grows quadratically with any extra distance.

The place we're really concerned about is the lens, which is further from the diode and unlikely to spend time in continuous direct exposure. These recommendations are made with the assumption that the irradiance is constant in the space, bathing the whole eye. In our case the eye will be able to conduct heat away more easily, because only the nearest part of the eye will experience the peak irradiance, and that part is still under the safety limit for the geometry we've built.

Other People's Work

'Eye Tracking Headset Using Infrared Emitters and Detectors' set their safety limit for 10 minute exposures; they apparently use 5 LEDs together, which seem to have all been QRE1113s.

'DualBlink' uses the QRE1113 set at 20mW.

In 'Class I infrared eye blinking detector' they do a thorough analysis on their system, which includes an Agilent HSDL9100-21 IR-transmitter and receiver (940nm, 50nm bandwidth, 1.8mm source, apparent source size of 0.8mm, 26 degree beam divergence, which is the area for 63% of power). They directly measured 7.6 uW of Radiant flux in a standards compliant way, and using the strictest standards designed it to be operational for 8 hours a day with a 1kHz sampling rate; they use IEC 60825-1, which treats Lasers and LEDs the same and thus dramatically over-estimates the risk.

According to 'Eye safety related to near infrared radiation exposure to biometric devices':

Eye safety is not compromised with a single LED source using today's LED technology, having a maximum radiance of approximately 12 Wcm−2 sr−1 [12]. Multiple LED illuminators, however, may potentially induce eye damage if not carefully designed and used.

Best References

ICNIRP GUIDELINES ON LIMITS OF EXPOSURE TO INCOHERENT VISIBLE AND INFRARED RADIATION.

EHS Document on Light and Near-Infrared Threshold Limit Values.

Eye safety related to near infrared radiation exposure to biometric devices.